Rise of AI: Do they follow Asimov’s Three Laws?

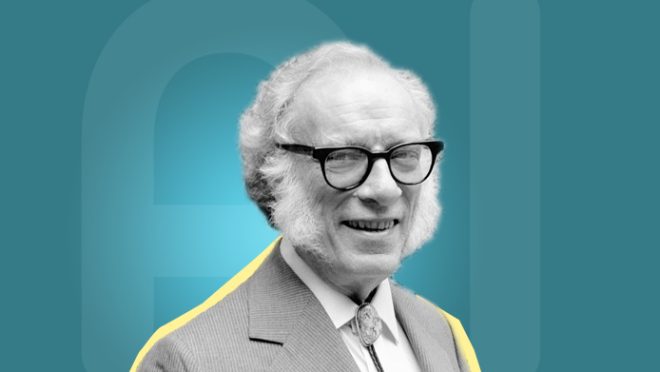

Asimov’s Three Laws, or the Three Laws of Robotics, are a set of fictional rules created by author Isaac Asimov, who is considered one of the most iconic and influential writers in the sci-fi genre. The three laws hold a high place in the sci-fi community and over the years have been discussed and depicted from many perspectives. The ethics and implications of these laws are still a hot topic in the science fiction community. But are they only fiction?

Even a decade ago, artificial intelligence seemed like fiction, and we could not imagine that AI would create such a buzz. Yet here in 2024, many people are wondering whether they will be sacked and replaced by an AI. So perhaps it’s time to think about AI from every possible perspective. And since Asimov’s laws were written for ‘robots’, and autonomous robots are likely to run on AI, they offer an interesting perspective on the AI revolution.

Before we discuss further, let’s take a look at Asimov’s three laws:

- A robot may not injure a human being or, through inaction, allow a human being to come to harm.

- A robot must obey the orders given it by human beings except where such orders would conflict with the First Law.

- A robot must protect its own existence as long as such protection does not conflict with the First or Second Law.

Asimov himself later added another rule, known as the Zeroth Law, which supersedes the others: “A robot may not harm humanity, or, by inaction, allow humanity to come to harm.”

So, in essence, the rules embody the idea that, whatever happens, a robot may not let a human come to harm.

At the moment, a number of AI systems are open to the public. More specifically, these are AI language chatbots, so they have no physical presence, which eliminates the chance of them causing direct physical harm. But do they abide by these rules? Are they created to ensure the safety of humanity?

When asked directly, these AI chatbots answer that they are not bound by Asimov’s laws, possibly because the rules are fictional and the chatbots are not robots. However, as funny as it is to ask the chatbots these questions, it also leaves room for serious discussion.

If they are not bound by the laws of robotics, then what are they bound by? ChatGPT responds that it is bound by ethical guidelines and principles set by OpenAI, which include safety, privacy, security, fairness and transparency. Google’s Gemini, on the other hand, says: “While I don’t operate under Asimov’s Laws, I am designed with safety and ethical principles in mind. My training data is carefully curated to minimize biases and promote positive outcomes.”

Further probing shows that the chatbots are programmed to be ‘as nice as possible’ and will often try to evade critical ethical questions. If pressed for a yes-or-no answer, or pushed to make a morally difficult choice, they will either choose the morally admired option or refuse to answer on the grounds that, as chatbots, they are not suited to answer that question.

While it is always fun to discuss ethics and morality with an artificial intelligence, these exchanges also tell us that AI has a long way to go when it comes to difficult choices. Development in the field is very rapid, however, which means that discussions about ethical guidelines and safeguards for AI are becoming crucial.

A number of celebrities have already been victims of AI misuse, and many artists have voiced concerns over the open and free use of AI without regard for others. In the coming years, AI research and development is going to receive more attention and possibly undergo dramatic changes. And while Asimov’s laws are fictional, it’s time to consider them seriously and ensure that all AI is bound by some sort of safeguards, for our own sake.